Turbo Tools V4 - Turbo Render, Temporal Stabilizer, & Turbo Comp

Major Feature Release - Turbo Tools V4 now with Temporal Intelligence added to the Temporal Stabilizer + 700% faster split channel stabilisation!

Turbo Tools is an easy-to-use addon that allows you to render at much lower samples and still get great results. Using lower samples means faster renders, and thanks to the Temporal Stabilizer it's suitable for animations too. No more fiddling about trying to find the best samples: you just choose the quality and speed you need, and it'll set it all up for you. There is also a host of features to speed up post-production, and it's compatible with all hardware and operating systems supported by Blender.

Overview video showing all main features:

The three main areas of the addon are:

- Turbo Render - Vastly superior render results in a fraction of the time for both CPU and GPU Cycles rendering. Retains image quality the standard denoisers can't maintain, in some cases even when providing them with images rendered for 120x longer! For complex scenes you can expect your single frame renders to be reduced from several hours to several minutes! Check out the video below to see how Turbo Render was able to get a GTX 1070 to destroy an RTX 4090!

- Temporal Stabilizer - This massive feature provides per-pass temporal stabilization, allowing denoising flicker to be removed from animations that would otherwise need far longer render times to avoid it! Check out the video below to find out how easy and effective it is, and to see the new Temporal Intelligence features added in Turbo Tools version 4, which allow it to tackle even the most complex animations with both speed and ease:

-

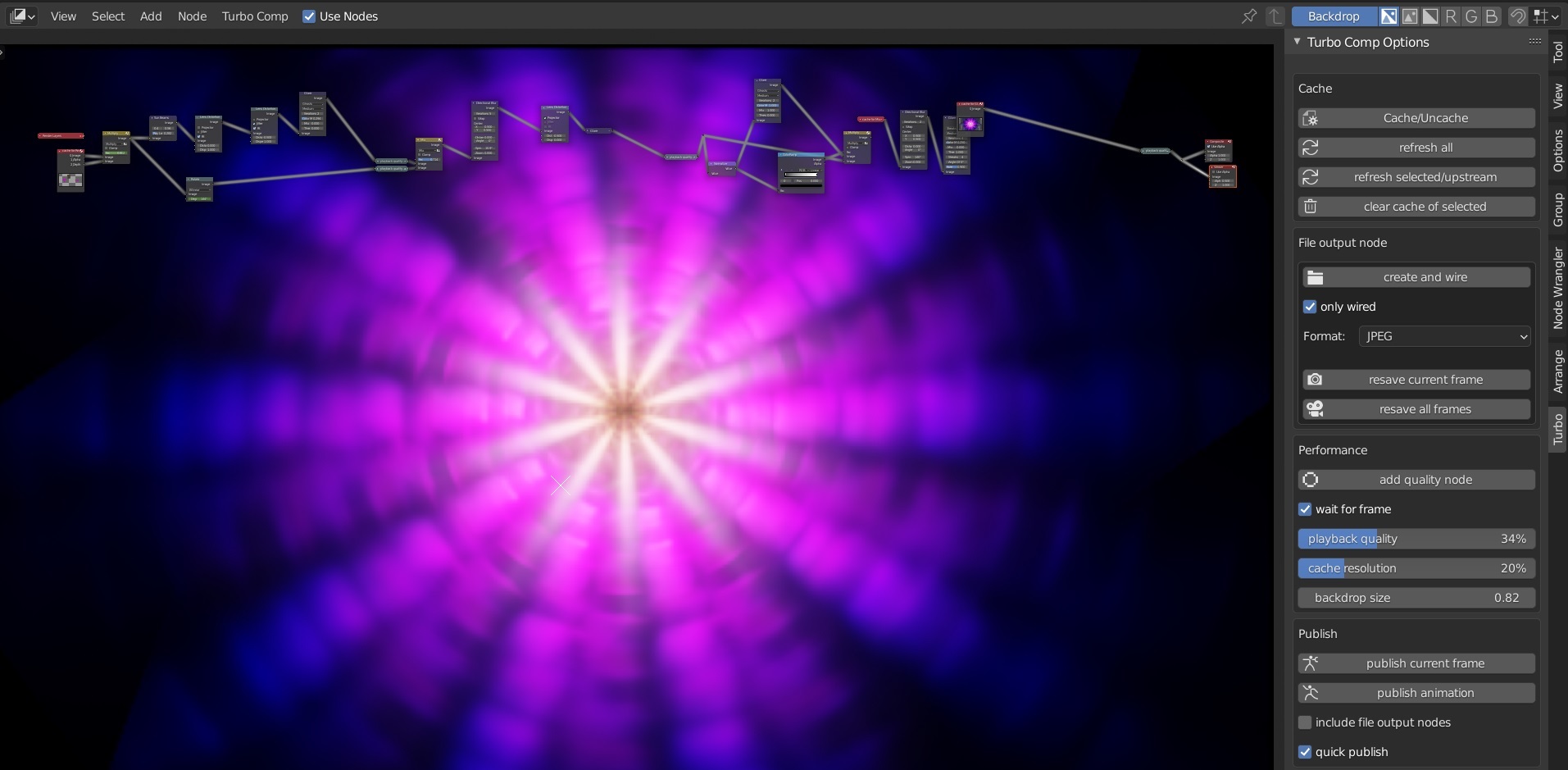

Turbo Comp - A full compositor suite with real-time playback and compositing directly in the compositor backdrop, branch caching, resaving of file output nodes without re-rendering, automatic file output node creation, publishing of multiple compositions with ease, and lots more.

TURBO RENDER

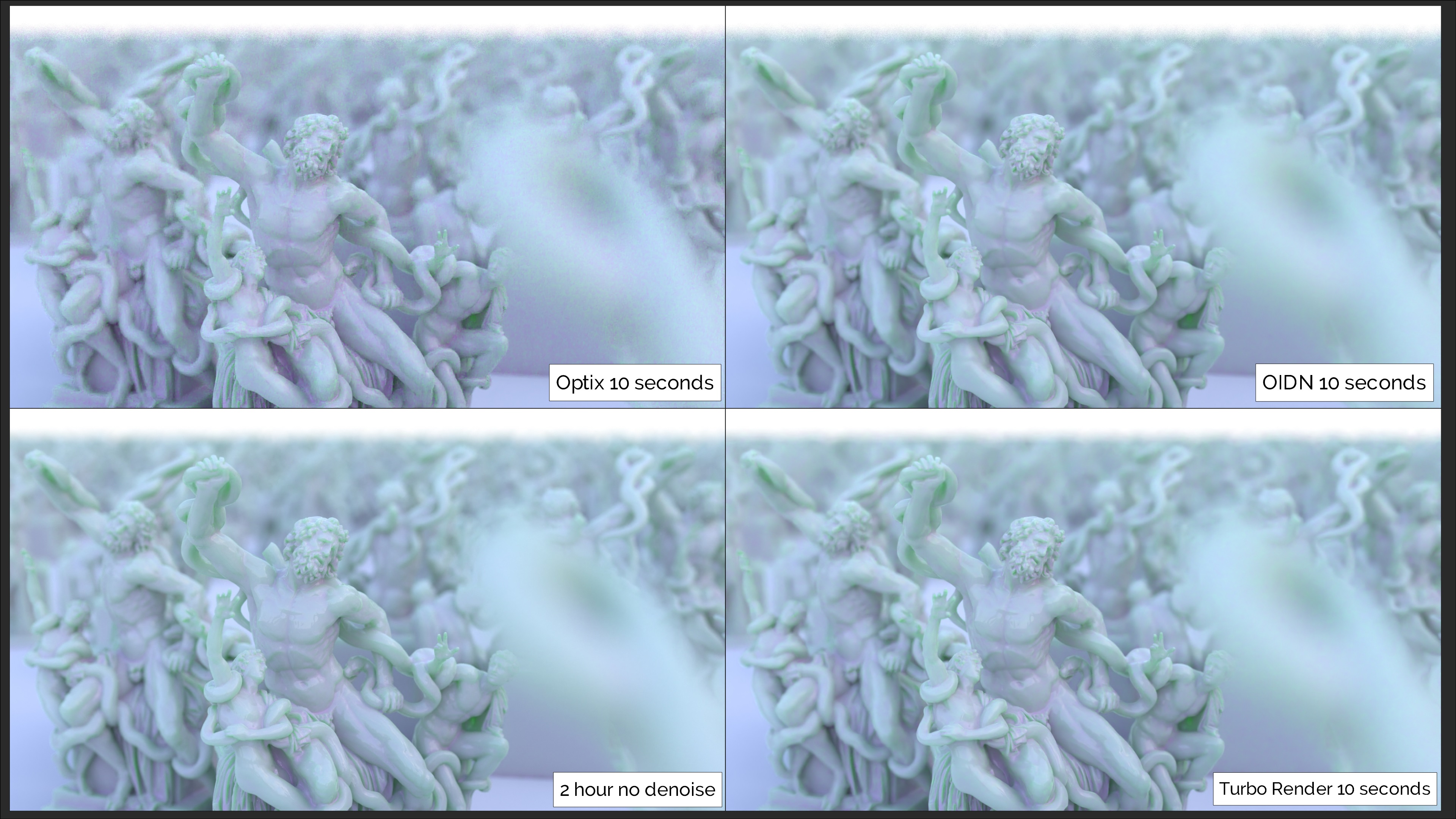

In difficult-to-render scenes (indoor scenes, volumes, scenes containing glossy materials with detailed textures, SSS, etc.), Turbo Render provides results that OIDN/OptiX could require you to render up to 120x longer to match. When no denoiser is used, you could expect to render up to 960x longer to achieve the same results (scene-dependent). It also simplifies the entire rendering process thanks to render setting pre-sets designed to help you get the result you need without diving into the complex render settings. It even works with complex compositing setups without the need to do any rewiring yourself! The results are remarkable, as can be seen below.

Classroom scene using the new draft mode. Total render time (including denoising) was under 17 seconds on a GTX 1070 with an i7-7700K!

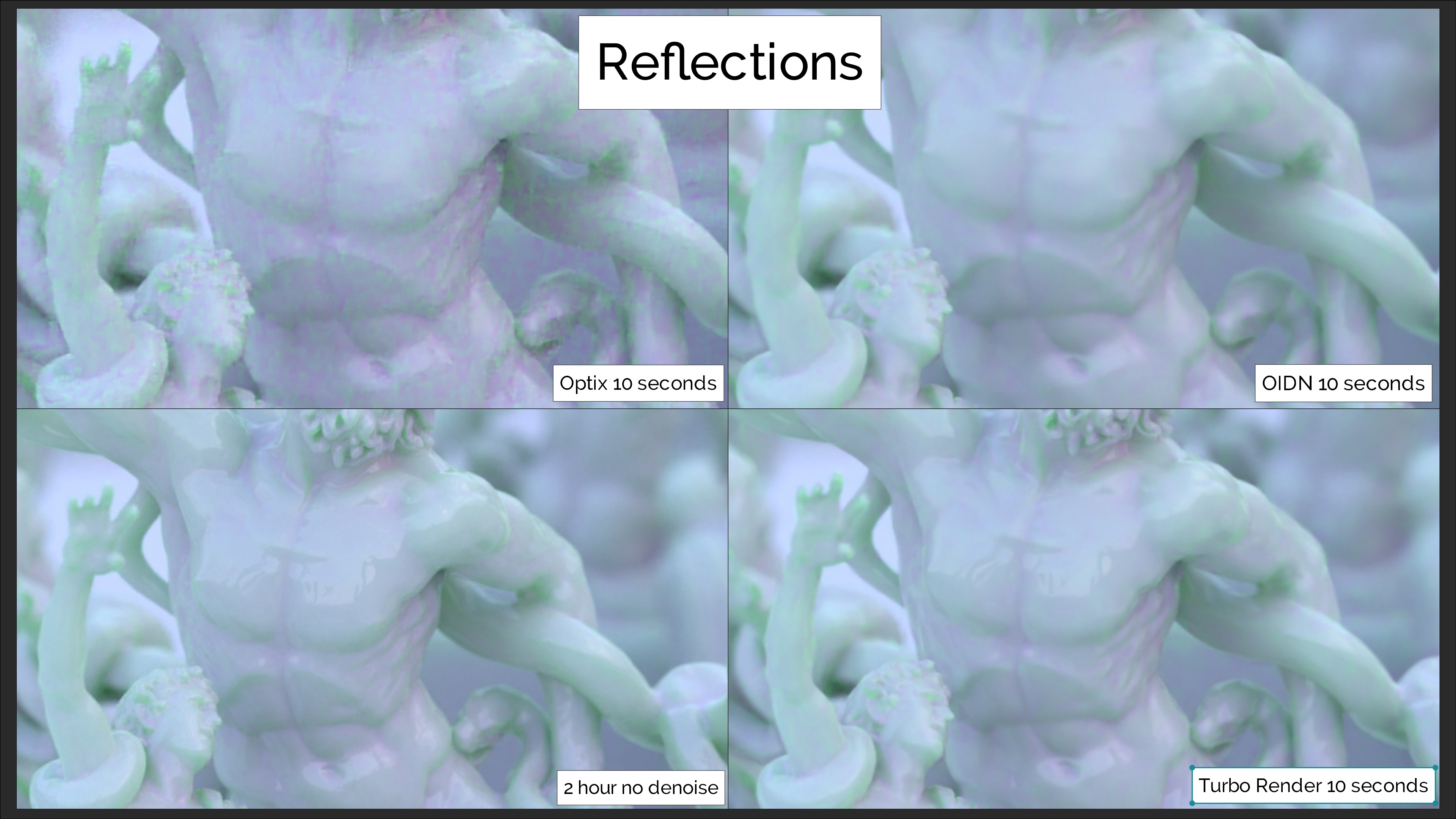

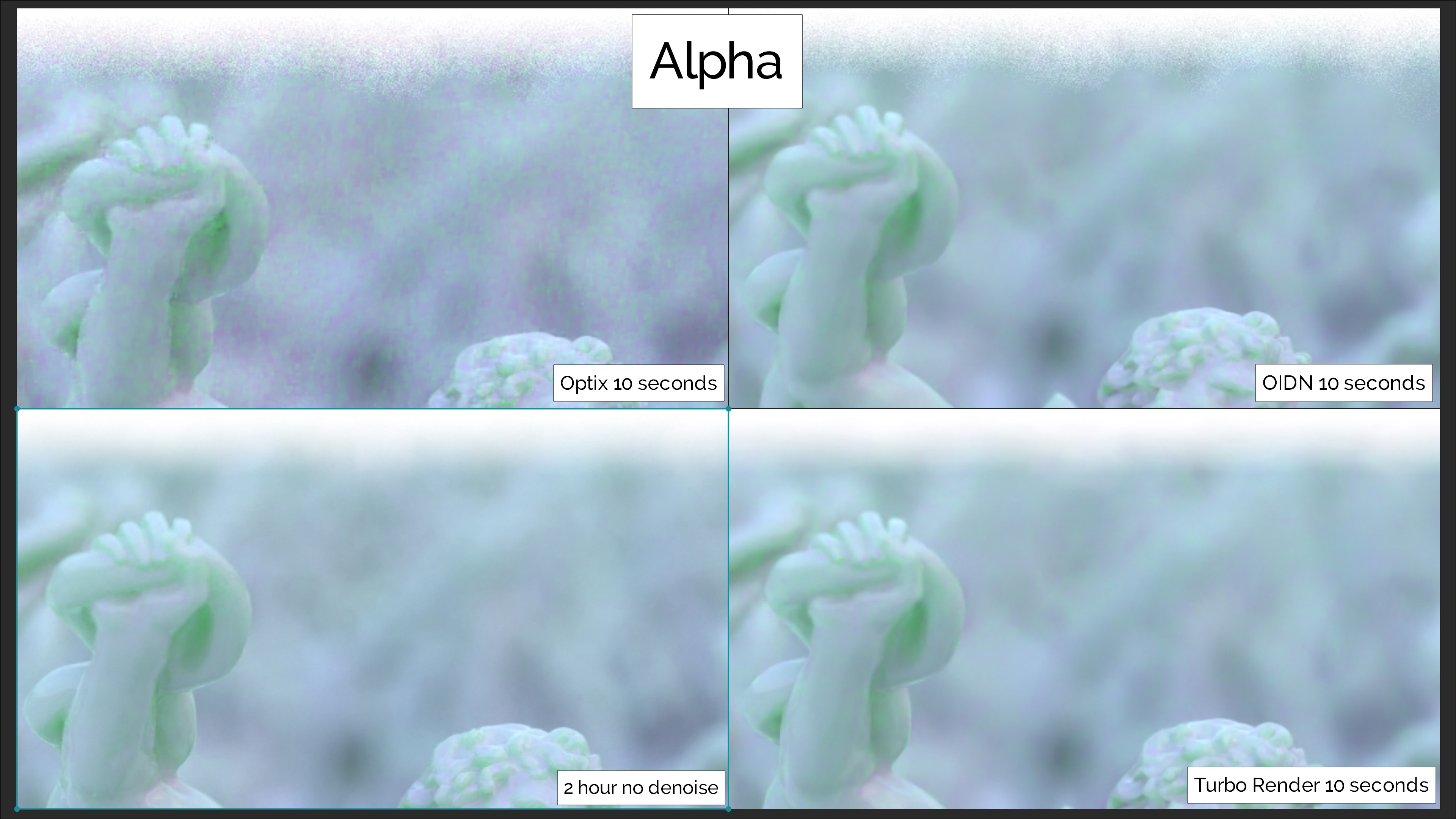

All examples rendered using a GTX 1070. Where there are comparisons, OptiX and OIDN were always set to the most accurate settings.

Scene 1 - heavy DOF, strong SSS, film set to transparent to test alpha.

Click here to download test scene 1

Test scene 2 is available from the shop.

Turbo Render is able to maintain reflection clarity at extremely low samples. OIDN required over 4 minutes of rendering to achieve a similar quality.

The image is rendered with film set to transparent to produce an alpha channel. The white background (top of the image) is then added in the compositor to highlight the difference in quality. OIDN required a 4 min render to get close to Turbo Render's 10 second render result, but there was still visible noise (video to follow).

Scene 2 - Indoor scene to test geometry detail and texture detail.

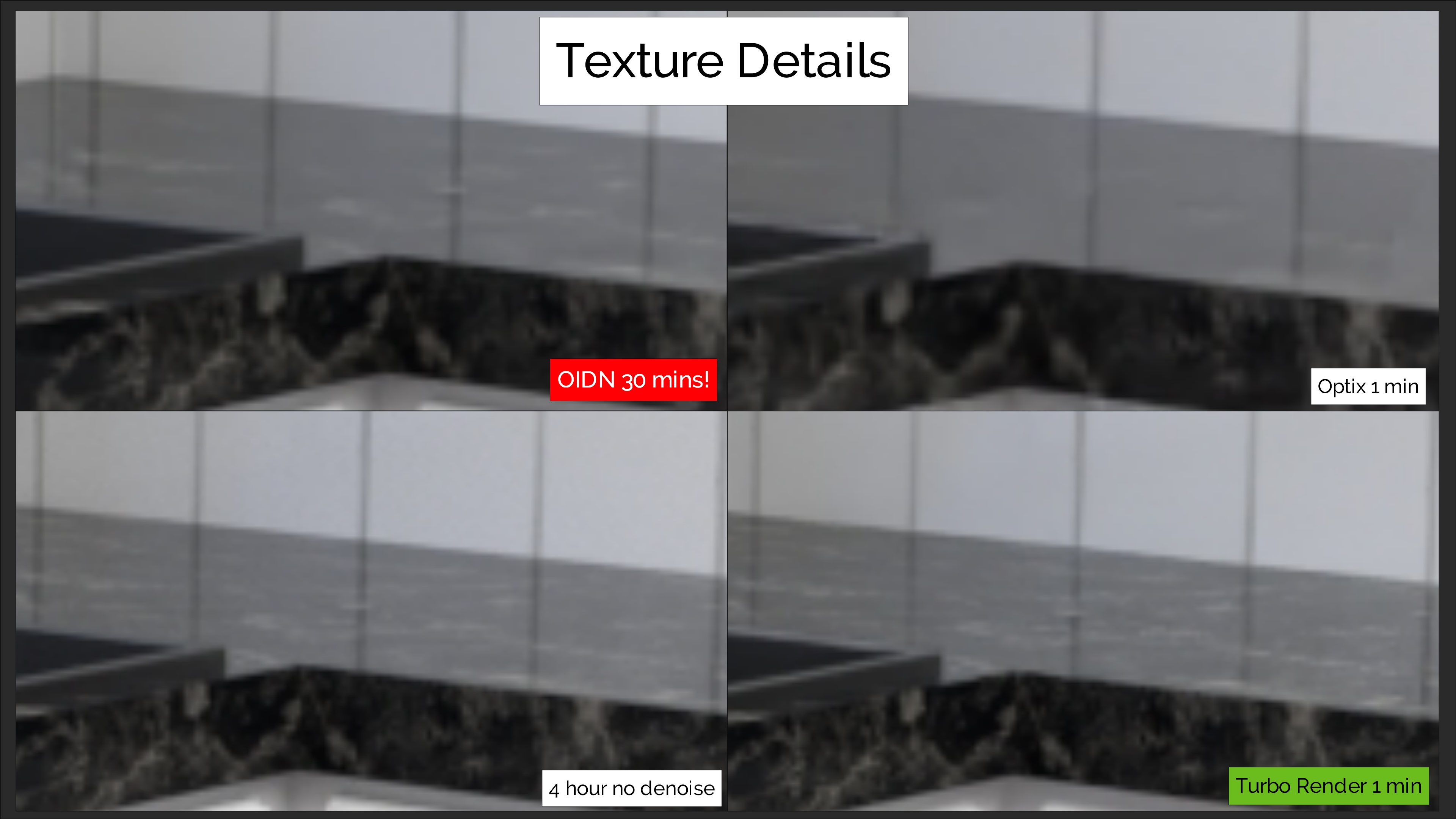

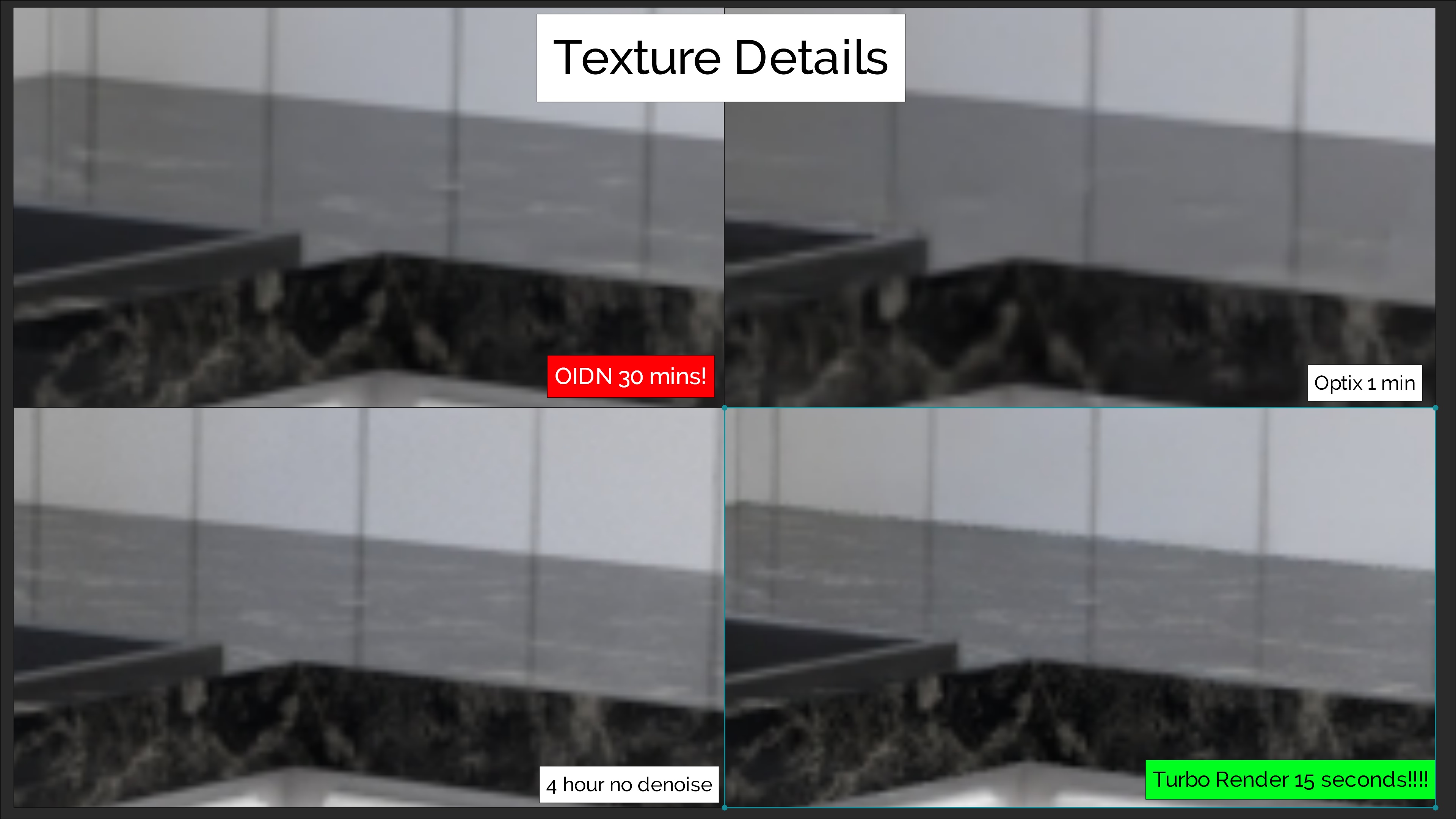

Above we can see that even after 30 minutes of rendering, OIDN is still unable to retain as much texture detail as Turbo Render can in just 1 min!

Even with a 15 second render (a whopping 120x faster than the 30 min render used by OIDN), Turbo Render can still maintain texture detail using its 'Enhance Textures' option:

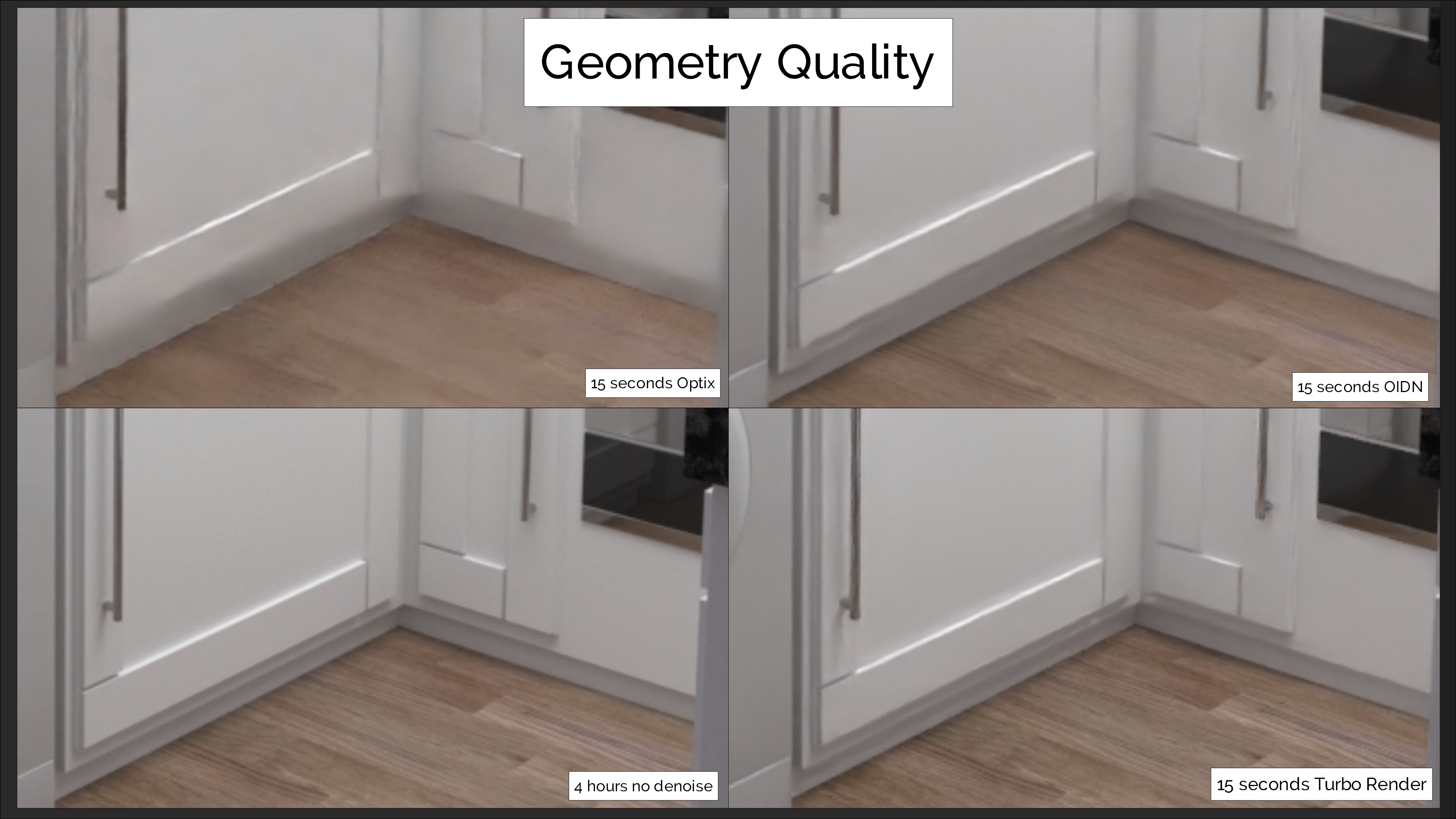

Above we can see that the bottom of the cupboard area is getting quite badly mangled by OIDN, and even worse by OptiX. This is because denoisers can struggle where dark geometry edges meet shadows, due to the lack of contrast. Turbo Render can achieve much better results using its 'slow' (all passes) mode, even at very low samples (as shown above, bottom right).

Below shows that even when rendering at 1 min per frame (240x less time per frame than the ground truth of 4 hours per frame), we can still get excellent results without any noticeable flicker. To do this with OIDN and maintain the same geometry, texture and reflection detail, we'd need to render at 30 mins per frame (as can be seen in the texture comparison stills above, and in the YouTube video examples at https://www.youtube.com/watch?v=toManFMUSFQ).

Notice how Turbo Render (right) has vastly superior image quality compared to OIDN (left). The most noticeable improvements in this image are the crisp shadows on the notice board and the clarity of the reflections under the desk.

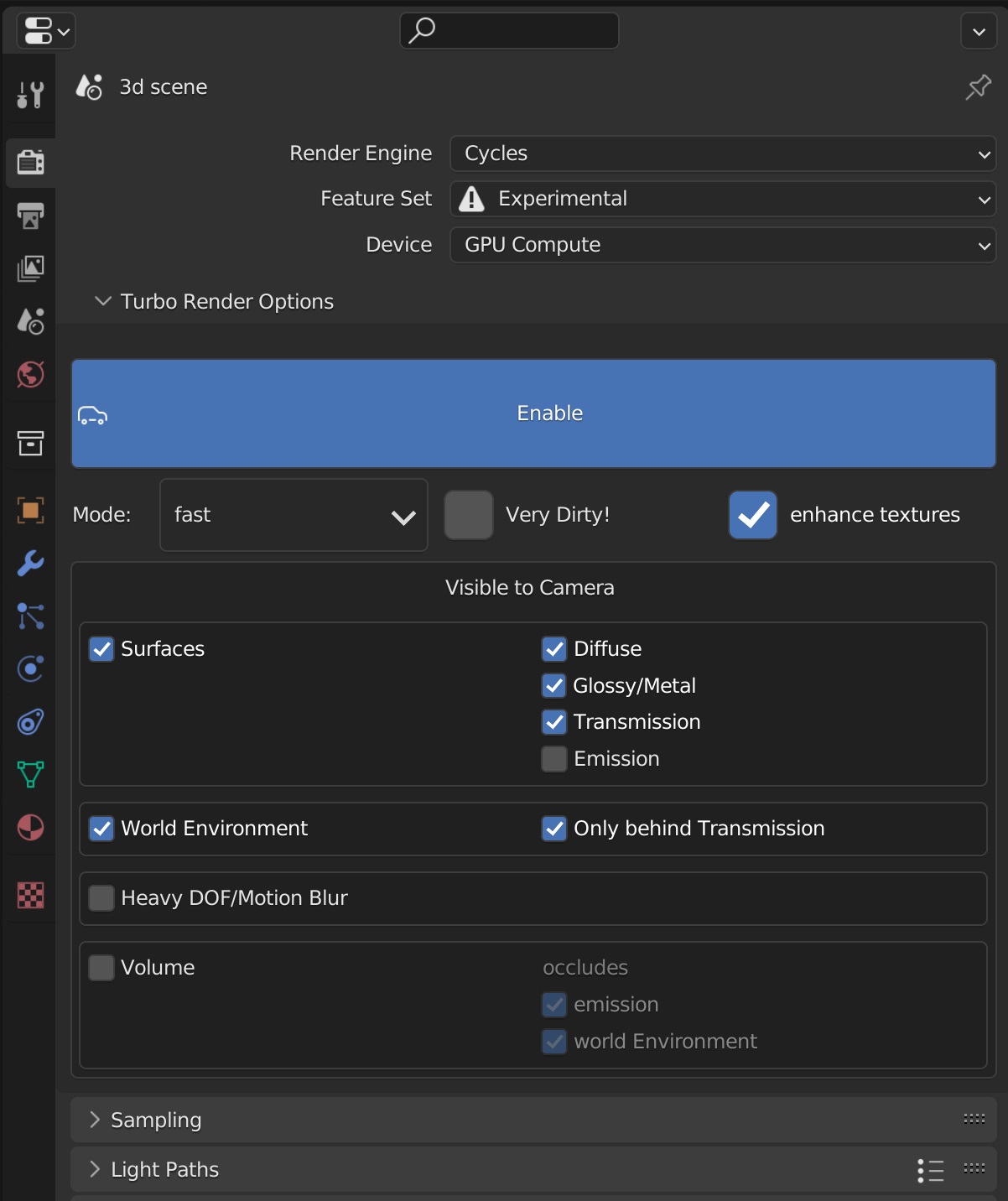

The UI is very simple to understand, and offers options to ensure the best possible results for any scene and sample settings.

Instructions: Turbo Render is able to reduce render times so dramatically because it can produce high quality renders at samples that are too low for Blender's render panel denoisers to do a good job with:

Open Image Denoiser version (left): 1024 samples with a noise threshold of 0.01. Turbo version (right): 324 samples with a noise threshold of 0.1 (ten times more noise). Notice the paint flecks are retained with Turbo, and the skin detail is completely preserved compared to the blurry OIDN result.

With this in mind, always be sure to set the samples as low as you can without reducing image quality. You can do this either by using the sample pre-sets, or using the 'user' sample pre-set mode which allows you to enter the sample settings manually.
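Finding the lowest usable sample count is essentially a downward search: keep lowering the samples until the result stops looking clean, then go back one step. The sketch below is a hypothetical helper, not part of Turbo Tools; `looks_clean` stands in for your own visual check of a test render (for example a small render region) at a given sample count, and the candidate list is only an example.

```python
from typing import Callable, Sequence


def lowest_acceptable_samples(
    candidates: Sequence[int], looks_clean: Callable[[int], bool]
) -> int:
    """Return the lowest sample count that still passes `looks_clean`.

    Hypothetical helper: counts are tried from highest to lowest and the
    search stops at the first failure, since fewer samples only add noise.
    If even the highest count fails, it is returned so you know to raise
    the range. `candidates` must not be empty.
    """
    ordered = sorted(candidates, reverse=True)
    best = ordered[0]
    for samples in ordered:
        if not looks_clean(samples):
            break  # first failing count: the previous one is the answer
        best = samples
    return best


# Example: pretend anything below 200 samples still shows noise after denoising.
print(lowest_acceptable_samples([1024, 512, 256, 128, 64], lambda s: s >= 200))
```

In practice each `looks_clean` call is one quick region render, so a short candidate list keeps the number of test renders small.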

It's also important to understand that the biggest gains (often several hours) are to be had on renders that take a long time without Turbo Render. Scenes that already render quickly (under a minute or two) can't be reduced as significantly. For such scenes it's advisable to use the 'fast/draft' denoise mode, unless you notice wobbly geometry or need to work with the individually denoised passes in the compositor after rendering. Here's a video explaining draft/fast mode usage: https://www.youtube.com/watch?v=HzCm_vp6R2M

Turbo Render is designed with ease of use as a top priority. In the Turbo Render Options area, simply click enable, choose a sample preset to automatically set up the sample settings to the desired quality, choose the cleaning mode (combined image or clean passes), tell it what's in the scene, and render. That's it! Turbo Render will then analyse the scene and other render settings to produce the final render.

IMPORTANT: ensure a default project cache folder for new scenes is specified in the addon preferences, and that each scene has a valid cache folder set prior to rendering. The scene's cache folder can be set at the bottom of the Turbo Render options, or in the compositor's N panel Turbo tab. The cache folder must not be a relative path (a path that starts with '//'), so when setting it, make sure you uncheck the Relative Path option in the Blender file browser.

If you use the 'User' sample pre-set, then to achieve the biggest speed gains you should reduce your samples below what you would usually need. Turbo Render is so fast because it's able to produce clean images from noisier render results than other denoisers can.

Turbo Render is compatible with:

- Still renders

- Animations

- Existing compositor trees (no matter how complicated)

- Rendering of multiple scenes and view layers from a single compositor (all scenes will use their own Turbo Render settings).

- All Turbo Comp features (caching, publishing, resaving file output nodes without re-rendering etc)

Options:

Denoising Modes -

- Draft (clean image pass only) - The new draft mode offers extremely fast denoising, and when combined with the 'Enhance Textures' option will provide results that far surpass what would be expected of a draft render, even at extremely low samples. Thanks to its fast processing times, it's also suitable for final renders of scenes that render to a denoisable level in under 10 seconds.

- Medium (clean image pass only) - The medium option is slightly slower than Draft, but on noisier images will offer better all round detail, particularly in reflections.

- High (clean image pass only) - The high mode will offer the best lighting, shadows and reflections of all but the ultra mode. This is suitable for final renders where the individually denoised passes aren't needed for compositing.

- Ultra (cleans all necessary passes) - This will clean individual passes for use in the compositor afterwards, and can also be used to resolve any geometry wobbliness or poor geometry detail present in the lower pre-sets. Which passes are cleaned is decided by scene content and render settings. IMPORTANT - If you intend to use the individually cleaned passes in a 3rd party compositor, you can either use the render layer cache EXRs found in the cache folder you set, or, if you prefer another format, set up a file output node directly from the render layer node. Blender's own output can be set to something light such as FFmpeg, because it can only output the denoised image pass, not the other denoised passes. If your passes include data passes such as cryptomattes, 32-bit EXR is advisable.

Cycles Speedup Options -

- Optimise HDRI - This will optimise the importance map, leading to potentially faster renders and less RAM usage. The improvement will be most noticeable with HDRI world environments which are 8k or above, and will reduce memory consumption during rendering by around 1GB for a 16k HDRI.

- Prevent Fireflies - Recommended. This will reduce the chance of fireflies and can also provide a potentially massive render time reduction (scene-dependent).

Sample Pre-sets - A quality level for every occasion. No more messing about trying to find the ideal render sample settings, simply choose the desired quality and hit render. Modes include: Crap, Medium, High, Ultra, Insane, User. For animations you may want to use your own sample settings, as these have been optimised for fast still images. Using them for animation may cause inconsistencies between frames.

Recommended minimum sample presets to use for each denoise mode:

Very Dirty - Very dirty should be used if you're using very low samples or still have noise in the image after it's been denoised.

Interior Scene - Adjusts the render pre-sets to produce better results for interior scenes or scenes with a lot of indirect illumination.

Animation - This will optimise your chosen sample pre-sets for animations.

Enhance Textures - This will ensure that texture detail is maintained even at very low samples. In draft mode it will also improve reflections and shadows.

Visible to Camera - Use these options to specify what the camera will be able to see. This in combination with various other render settings (light bounces, film etc) enables the system to choose the optimal approach to ensure the best results. Uncheck what's not needed to ensure the fastest processing time. Turning on Heavy DOF/Motion Blur, Emission, and enabling the 'behind volume' sub options is not always necessary (particularly at higher render settings), so try leaving those off for increased performance, only enabling if you notice noise around the edges of objects, or noise behind volumes.

Problem Solving - Automatically fix common rendering issues if they're visible after rendering. Only enable these if you encounter problems, because if the problem isn't present the workarounds will be detrimental to rendering speed or glossy levels (depending on which you enable). IMPORTANT - If you enable the 'swirly/blotchy artefacts' option and you also have the 'animation' option enabled in the Turbo Render options, it's advisable to change the sample pre-set to 'user', disable the noise threshold checkbox, and set your own samples. The reason is that when the swirly artefacts option is enabled, adaptive sampling is disabled, meaning the max samples will be used for every pixel; because the animation option increases the max samples to at least 600 (even for the crap sample pre-set), you'd potentially get unnecessarily long render times.

Checklist

- IMPORTANT - Make sure you have selected the lowest possible sample pre-set for your scene. Turbo Render's speed gain is only achieved when using lower samples than you would usually use. Find the optimum setting quickly by rendering a small render region of the noisiest area of the image. Repeatedly render and reduce the samples until you find the lowest number of samples that doesn't negatively impact image quality, then turn off the render region before rendering properly. For the most control, consider using the 'User' sample preset mode, as this will allow you to optimise the noise threshold and the min and max samples.

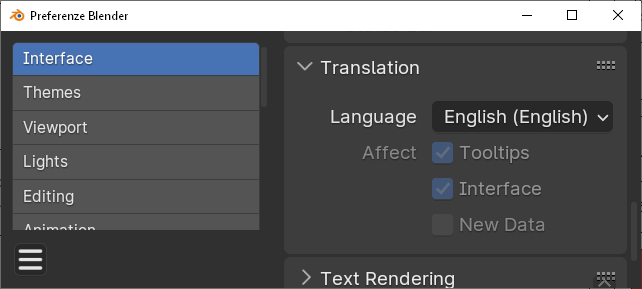

IMPORTANT - If you have Blender set to use a different language, you need to untick the option to create new data in your selected language to ensure the addon functions correctly. The addon occasionally creates temporary data blocks and then refers to them by name; if translation of new data is enabled, the name will be translated to something other than what the addon is looking for, and you'll get an error. You can change the language setting in the Blender preferences.

- Make sure a render layer cache folder is set, and that it's not a relative path. It needs to be an absolute path such as 'C:/something/something/', not '//something/'.

- Make sure you have 'very dirty' enabled at low samples, otherwise you'll get noise even after denoising.

- Make sure you put a tick in the visible to camera section for every surface type in the scene. Failure to do this will result in un-denoised elements. Don't tick options that aren't in the scene, otherwise this will increase render time unnecessarily.

- If your camera has strong DOF or motion blur and you notice noise in those areas after rendering, be sure to tick the 'Heavy DOF/Motion Blur' option. You can tell if the scene has DOF if near or far objects are blurry compared to the focal point.

- Avoid enabling 'optimise HDRI' in scenes that don't use a HDRI in the world environment, or if the HDRI is lower resolution than 4096 pixels wide.

- Only use the 'Prevent Fireflies' option in scenes with very strong lighting or an HDRI, otherwise the brightness of highlights and reflections will be impacted.

- Make sure Blender's own output is not set to multilayer EXR. If you need multilayer EXR in your third party compositor, use the render layer cache generated by Turbo Render instead.

-

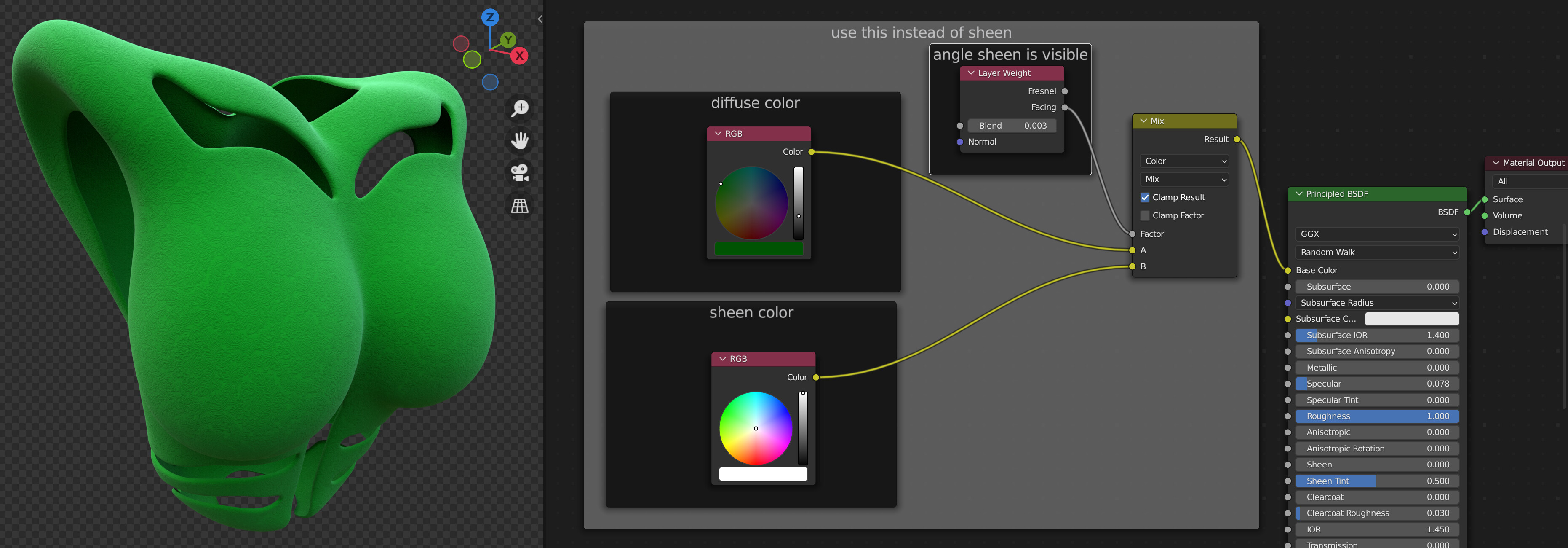

Avoid the principled BSDF's Sheen option for optimal results. You can use a layer weight and a mix node into the base colour instead:

IMPORTANT - If you've already rendered with Turbo Render previously, check that the compositor node tree doesn't have any small minimised nodes covering the render layer node. On extremely rare occasions Blender fails to run the queued up Python scripts after rendering completes. This results in Turbo Render not being able to put the compositor tree back to its pre-render state, which will lead to incorrect results the next time you render. It usually only happens if system resources are dangerously low. If it happens, remove the additional nodes, check the links are correct, and turn off any render passes that shouldn't be on. See troubleshooting for more details.
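The cache folder rule in the checklist (an absolute path, never a Blender-relative '//' path) can be checked with a few lines of plain Python before you start a long render. This is a hypothetical helper, not something the addon exposes; it only inspects the string and does not check that the folder exists or is writable.

```python
from pathlib import PurePosixPath, PureWindowsPath


def is_usable_cache_folder(path: str) -> bool:
    """Return True if `path` looks like an absolute, non-relative folder.

    Hypothetical check. Blender treats a leading '//' as relative to the
    .blend file, so such paths are rejected outright, even though a
    forward-slash network path like '//server/share' would also start
    that way. Both Windows ('C:/...') and POSIX ('/mnt/...') absolute
    paths are accepted.
    """
    if not path or path.startswith("//"):
        return False
    return PureWindowsPath(path).is_absolute() or PurePosixPath(path).is_absolute()


for candidate in ["//cache/", "C:/renders/cache/project_a/", "cache/project_a"]:
    print(candidate, is_usable_cache_folder(candidate))
```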

The new Draft mode is able to produce surprisingly good results even at extremely low samples. This is the classroom scene rendered in 16 seconds on a GTX 1070 using only 12 samples! Based on Blender's benchmark website, a more modern mid-range GPU such as an RTX 3070 would complete this in under 5 seconds!

Command line rendering

To render from the command line use:

Animation:

```shell
blender -b "E:\blender\benchmark scenes\classroom\Classroom.blend" --python-expr "import bpy; bpy.ops.threedi.render_animation()"
```

Still image:

```shell
blender -b "E:\blender\benchmark scenes\classroom\Classroom.blend" --python-expr "import bpy; bpy.ops.threedi.render_still()"
```

Alternatively, you can use the included command line rendering script (for TT 3.0.5 or above), which can be found with instructions in the download area. The script allows for multi-machine rendering and temporalizing.

If you want to set other things such as the output directory or frame range, refer to:

https://docs.blender.org/manual/en/latest/advanced/command_line/render.html#single-image
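If you script several renders, a small helper can assemble the command shown above as an argument list. This is a hypothetical sketch: `-b`, `-o`, `-s`, `-e` and `--python-expr` are standard Blender command line options, and they are placed before `--python-expr` because Blender applies its arguments in order. Whether the Turbo Render operators honour an output path or frame range passed this way is an assumption, so test it on a short frame range first.

```python
from typing import List, Optional


def build_render_command(blend_file: str, animation: bool = True,
                         output: Optional[str] = None,
                         frame_start: Optional[int] = None,
                         frame_end: Optional[int] = None,
                         blender: str = "blender") -> List[str]:
    """Assemble a background Turbo Render command (hypothetical helper).

    Blender processes arguments left to right, so the scene overrides
    (-o, -s, -e) come before --python-expr runs the render operator.
    """
    operator = "render_animation" if animation else "render_still"
    cmd = [blender, "-b", blend_file]
    if output is not None:
        cmd += ["-o", output]            # render output path
    if frame_start is not None:
        cmd += ["-s", str(frame_start)]  # first frame
    if frame_end is not None:
        cmd += ["-e", str(frame_end)]    # last frame
    cmd += ["--python-expr", f"import bpy; bpy.ops.threedi.{operator}()"]
    return cmd


cmd = build_render_command(r"E:\blender\benchmark scenes\classroom\Classroom.blend",
                           output="E:/renders/classroom/frame_####",
                           frame_start=1, frame_end=50)
print(cmd)
```

Passing the list to `subprocess.run(cmd, check=True)` avoids shell quoting problems with paths that contain spaces.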

Full Video demonstration showing Turbo Render and Turbo Comp here:

Limitations

Due to a Blender limitation/bug, it's not currently possible for the addon to support Fast GI Add mode. Bug report here: https://projects.blender.org/blender/blender/issues/105332

Recommended Minimum System Requirements

CPU - A quad-core CPU running at over 4 GHz (the processing is multithreaded, so the more cores the better; anything below 4 GHz will still work, but processing times may be unacceptable on low core count machines).

RAM - For a 1080p render, 3 GB to 4 GB of memory should be free before starting rendering. This requirement quadruples each time you double the render resolution; for example, 4K renders will require approximately 12 GB to 16 GB of free RAM. If your system has paging turned on, then when RAM is depleted the hard drive will be used instead, which will be very slow.

Operating system - Any

Hard Drive Space - Sufficient space for the cache files (at 16-bit, approx. 50 MB per render layer node per frame + 5 MB per standard 30% cache node).
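The memory and disk figures above can be turned into a rough estimate before a long render. This hypothetical sketch scales the 1080p RAM range by pixel count (doubling both width and height gives four times the pixels, hence the quadrupling), and assumes the 50 MB and 5 MB cache figures are per frame at 1080p and 16-bit; real file sizes will vary with the passes you enable.

```python
from typing import Tuple

# Reference figures from the requirements above (1080p, 16-bit cache).
BASE_PIXELS = 1920 * 1080
BASE_RAM_GB = (3.0, 4.0)     # free RAM range for a 1080p render
RENDER_LAYER_CACHE_MB = 50   # per render layer node, per frame
STANDARD_CACHE_MB = 5        # per 30% standard cache node (assumed per frame)


def free_ram_estimate_gb(width: int, height: int) -> Tuple[float, float]:
    """Scale the 1080p RAM range by pixel count (2x width and height = 4x RAM)."""
    scale = (width * height) / BASE_PIXELS
    return BASE_RAM_GB[0] * scale, BASE_RAM_GB[1] * scale


def cache_disk_estimate_mb(frames: int, render_layer_nodes: int = 1,
                           standard_cache_nodes: int = 0) -> int:
    """Rough cache size for an animation at 1080p and 16-bit (hypothetical model)."""
    per_frame = (RENDER_LAYER_CACHE_MB * render_layer_nodes
                 + STANDARD_CACHE_MB * standard_cache_nodes)
    return frames * per_frame


print(free_ram_estimate_gb(3840, 2160))    # (12.0, 16.0): 4K needs 4x the 1080p figure
print(cache_disk_estimate_mb(250, 1, 1))   # 13750 MB for 250 frames
```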

Turbo Comp

Turbo Comp provides massive compositor speed-ups thanks to an intuitive caching system. It's also where you publish your final composition and remove temporal flicker from animations.

Turbo Comp:

An intuitive collection of compositor tools to massively speed up your workflow.

The Blender compositor is where you can take a turd and polish it into something exceptional. The pros don't spend hours trying to get everything right in the 3D viewport, or spend huge amounts of time re-rendering every time a client needs an amendment. Instead, the majority of the work to get a render looking incredible is carried out in the compositor. This is why at the last Blender conference, the entire audience voted that the compositor be the next focus for development.

V1 of this addon addresses many of those needs by adding:

Caching

Cache entire branches of a node tree to ensure fast performance.

Real-time Compositing

Add, tweak, and cache all in real time.

Real-time Graph Editor

Modify curves in the graph editor and see the results in real time, for real-time automation!

File Output Node Tools

One of the longest requested features for Blender is to be able to resave a file output node without having to re-render the 3D scene... well, now you can! Even better, this feature isn't limited to single frames: you can resave all frames in a single click.

Not only that, you can also press a single button to generate and wire up file output nodes with the output destination automatically populated too!

Publishing

One click publishing temporarily discards any cache and exports your node tree at full quality to a movie file or image sequence. This can be done as many times as necessary as it doesn't require the 3d scene to be re-rendered. Audio can be included in the resulting file by adding the audio strip to the VSE. With the 'remove temporal flicker' option enabled, animation flicker will also be removed during the publishing process, allowing you to render animations up to 40x faster!

Automatic rewiring of your existing node trees

Hit render, sit back and it's all done for you. Automatically works with even the most complex existing compositor node trees.

Auto rename group inputs

No more wasting hours renaming group inputs. Select the group, click rename group inputs from source option, and you're done!

Full Tutorial

Instructions:

- download the addon

- go to the Blender preferences -> addon section

- choose to install

- choose the zip file you've downloaded

- IMPORTANT! Set the cache location in the preferences or in the compositor's N panel. This should be on a non-system drive for maximum performance and stability. Make sure it's set to something unique for each project to avoid overwriting any cache you haven't finished with.

- render the scene (the compositor will automatically generate the render layer cache for you.)

- go to the compositor (if you're not already there) and start compositing.

For best performance, place a cache node on a single wire that leads to the composite and viewer nodes before rendering. This will generate a lower quality standard cache in addition to the full quality render layer cache, ensuring faster playback because only the smaller standard cache EXR needs to be loaded each frame, rather than the full resolution render layer cache EXR.

The Caching System:

Scene Cache Folder

The location on your computer where the current scene's cache and standard cache will reside. This can be accessed from multiple computers at once, for example if one is rendering and you want to begin compositing on a different computer before rendering has finished. This is also a full render management system which allows you to automatically reload the cache in any blend file that has matching credentials (scene name, camera name, view layer name). For this reason, be careful not to change scene, camera and view layer names after rendering, otherwise the cache won't be found.

Animation

Enable this if you want all frames to be cached. When disabled only the latest render cache and standard cache files will be kept.

Validate Cache

When enabled, this will check that nodes downstream of the cache node still have the same parameter values, and if not, will automatically recalculate the tree and update the cache files for just the necessary frames. This can be very slow, so only use it after adding a new cache node, or if you know the cache is out of date (for example if you've changed a property on a node which is downstream of the cache node). Only applicable when animation is enabled. There's no need to enable it directly after rendering, as all the cache will have been created during rendering, so it's guaranteed to be up to date at that point.

If you find that the cache is not being updated for some reason (e.g. if you've changed something other than a node parameter, such as resolution), you can select cache nodes and click 'clear cache of selected'. This will delete the cache from disk and force an update.

Cache/Uncache

This will cache the selected nodes at a resolution determined by the 'new cache resolution' slider, or if they're already cached, will uncache them. Avoid caching render layer cache nodes, as this may cause issues with automatic rewiring following a render.

If you press this with a cached render layer node selected (not a render layer cache node), then all render layer cache nodes for this render layer node will be discarded and their links moved back to the render layer node. This is useful for returning the tree to its original state before sharing the file with someone who doesn't have the addon. The render layer cache EXR files will remain on disk to avoid accidentally losing potentially thousands of frames of render data, so these will need to be deleted manually from the specified cache folder. Standard cache node EXRs will be deleted from the cache folder, as these can be re-created without the slow process of re-rendering the 3D scene.

Cache Resolution

Determines the resolution of new cache nodes. This will need to be set appropriately for your system to avoid too much frame dropping. On an i7-7700K, 30% provided a fast frame rate when the main render output was set to 1920 x 1080.
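To see why a modest percentage plays back so much faster, note that a resolution percentage normally applies to each dimension, so the pixel count falls with its square. A quick check, assuming the cache is simply the output resolution scaled by the slider value (the addon's exact rounding is not documented here, so `round` is an assumption):

```python
from typing import Tuple


def cache_resolution(width: int, height: int, percent: float) -> Tuple[int, int]:
    """Scale each dimension by the cache percentage (rounding is an assumption)."""
    scale = percent / 100
    return round(width * scale), round(height * scale)


def pixel_fraction(percent: float) -> float:
    """Fraction of the full-resolution pixels stored in the cache."""
    return (percent / 100) ** 2


w, h = cache_resolution(1920, 1080, 30)
print(w, h, f"{pixel_fraction(30):.0%}")
```

At 30% of 1920 x 1080 the cache is 576 x 324, only about 9% of the pixels, which is why each cache frame loads so much faster than the full resolution EXR.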

Refresh All

This will update all standard cache nodes for the current frame. It will also generate cache nodes for uncached render layer nodes if cache files exist in the cache directory (which means you can never lose your work, even after closing Blender without saving!).

Refresh Selected/Upstream

This will refresh only the selected cache node and any cache nodes that rely on it upstream. This avoids unnecessarily refreshing downstream nodes that you know are up to date, particularly if those nodes rely on nodes that take a long time to calculate (denoise nodes etc.).

Clear Cache of Selected

This will delete the cache from the hard drive but will not remove the cache node. Handy if you need to force the cache to update.

File output node section

You can select a node or multiple nodes, then hit 'create and wire'. This will generate a file output node for each selected node, automatically create the links, and specify a directory and file name to save to.

The 'only wired' option will only wire up the sockets on the selected node that already have a link.

Resaving

Select the file output nodes that you want to generate new images, and then click either resave current frame or resave all frames (which creates an image sequence for the entire animation length set in the render properties).

Performance

Sync Mode

If you notice playback is slower than the scene FPS, you can choose 'frame dropping' from the menu; this will skip some frames to preserve the correct FPS. Ideally this should be set to 'Play Every Frame' if you have 'Validate Cache' enabled, otherwise cache regeneration will become quite sporadic during playback. This setting also affects the 3D viewport, so be sure to change it back before switching back to the 3D viewport.

Important performance consideration.

- Only enable the passes you actually need. The more passes, the bigger the cache file, and the slower the load time.

- Reduce the tree to a single cache node if possible. This will prevent the render layer cache node which may have many passes from re-loading during playback.

- In the addon preferences, consider lowering the render layer cache bit depth to 16-bit, as this will reduce the cache file size considerably, leading to faster load times.

Publish

Publishing generates a new animation from your most recently rendered frames that have an active render layer node present in the compositor, and saves the frame or animation to the location and in the format specified in Blender's output settings. Audio will also be included in any movie formats if you have audio in the VSE (ensure no video is present in the VSE, otherwise it will take precedence over the compositor). Animation flicker will also be removed during publishing if you enable the 'remove temporal flicker' option.

Publish Current Frame

Saves the result of the compositor as a still image to the location and in the format specified in Blender's output properties panel.

Publish Animation

Saves the result of the compositor as an animation or image sequence to the location and in the format specified in Blender's output properties panel.

Remove Temporal Flicker

Removes the flicker in animations caused by denoisers always producing a slightly different result on each frame. Only works for renders that don't use Cycles motion blur (Cycles can't generate the necessary vector data when motion blur is enabled). If you want motion blur, you can add it in the compositor afterwards using a Vector Blur node (ensure you have the vector and depth passes enabled before rendering).

Include File Output Nodes

With this option enabled any unmuted file output node will generate a new image sequence at full resolution (handy if you want to generate multiple variations). Note that only the active composite node can generate movie files with audio.

Quick Publish

Quick publish will use the cache to publish without recalculating the tree. For this reason, it's a good idea to drop down a 100% cache node after parts of your node tree that you don't intend to edit after rendering; this will generate cache during the render (a huge advantage over taking your renders to a 3rd party compositor).

allow viewer

Image editors set to ‘viewer node’ mode will show the progress of the publish operation. This is extremely useful if temporal stabilisation is enabled, because it allows you to spot issues immediately rather than having to wait until the entire animation has been processed, so you can quickly find the ideal settings for each scene.

temporal intelligence

A new suite of tools that analyse the render to avoid artefacts, allowing the temporal stabiliser to be used for far more complex animations than was previously possible.

surfaces only

Limits stabilisation to the 3D geometry to avoid artefacts on the world environment and other non-geometry elements. The temporalise aliasing setting can be used to control how much of the anti-aliasing between the 3D geometry and world environment gets stabilised. Keeping it quite low avoids jagged edges or incorrectly coloured pixels around objects. It also avoids ghosting of objects in the world environment. The temporal data can only be generated for local worlds, so ensure linked worlds are made local if you intend to use this option during publishing.

motion analysis

Motion analysis will analyse the movement of geometry across multiple frames to avoid all types of artefacts except those in reflections or behind glass.

max deviation

Exclude pixels from temporalisation if they are above this number of pixels away from the expected movement.

smooth

The stabilised pixels will be blended with the unstabilised pixels based on how close to the tolerance threshold they are. If a pixel is close to the tolerance threshold, the result will be mostly the original pixel. If the pixel is far below the tolerance, it will be mostly stabilised. This can help avoid obvious transitions. 0.02 is usually a good value.

variable tolerance

The tolerance will increase as the distance from the camera increases. Enable if distant objects are still flickering, but you can’t increase the tolerance without introducing artefacts on geometry near to the camera. Avoid if possible as this will increase the time it takes to stabilise each frame. The increment value defines how much the tolerance will increase by per scene unit (measured from the camera to the pixel).
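The way max deviation, smooth and variable tolerance interact can be pictured per pixel. The sketch below is a hypothetical model written from the descriptions above, not Turbo Tools' actual code: it assumes `smooth` is the width of a linear fade just below the tolerance (in the same units as the deviation), and that variable tolerance adds `increment` per scene unit of camera distance. The same pattern would apply to the depth perception settings, which reuse smooth and variable tolerance.

```python
def stabilisation_weight(deviation: float, max_deviation: float,
                         smooth: float = 0.0, distance: float = 0.0,
                         increment: float = 0.0) -> float:
    """Return how much of the stabilised pixel to use (0 = original, 1 = stabilised).

    Hypothetical model of the documented options:
    - variable tolerance: the tolerance grows by `increment` per scene unit
      of distance between the camera and the pixel
    - max deviation: pixels beyond the tolerance are left unstabilised
    - smooth: width of a linear fade just below the tolerance, so pixels
      near the threshold stay mostly original
    """
    tolerance = max_deviation + increment * distance
    if deviation > tolerance:
        return 0.0
    if smooth <= 0.0:
        return 1.0
    return min(1.0, (tolerance - deviation) / smooth)


def blend(original: float, stabilised: float, weight: float) -> float:
    """Linear mix between the original and stabilised pixel values."""
    return original + (stabilised - original) * weight


# A pixel 1.99 px off its expected path, with a 2 px limit and smooth = 0.02,
# sits in the middle of the fade band, so it ends up roughly half stabilised.
w = stabilisation_weight(1.99, 2.0, smooth=0.02)
print(round(w, 3), blend(0.20, 0.40, w))
```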

depth perception

Considers the distance of each pixel from the camera across frames. Helps to eliminate general artefacts and artefacts in mirrors and glass.

max deviation

Maximum distance a pixel can move away or toward the camera between frames. Greater movement will result in the pixel not being stabilised to avoid artefacts. Too low and nothing will be stabilised, 0.5 is usually a good value.

Smooth and variable tolerance operate the same as described in the motion analysis section above.

surface recognition

Considers each pixel's location on the surface of the object. Pixels will only be stabilised if all frames represent the same location on the object's surface within the specified tolerance. Use this if artefacts appear on complex moving objects such as wheels or cogs, and motion analysis and/or depth perception are unable to fix them. The temporal data can only be generated for objects with editable materials, so ensure all linked materials are made local if you intend to use this option during publishing.

tolerance

Increase to allow surrounding surface points to contribute to stabilisation. The range is 0 to 1, with 0 being an exact match and 1 being the full length of the object's surface away (a fractional percentage of the surface area).

Smooth and variable tolerance operate the same as described in the motion analysis section above.

object recognition

Makes sure that all pixels being considered for stabilisation belong to the same object. Helps avoid ghosting of one object onto other objects as they pass over each other. This should generally never be necessary, but may prove useful if an object’s motion is exceptionally unusual and motion analysis and depth perception are unable to fix the resulting artefacts. The temporal data can only be generated for objects with editable materials, so make all linked materials local if you intend to use this option during publishing.

tolerance

A tolerance of 0 might seem like the right choice, because an object should never be mixed with another, but a slight tolerance is usually necessary to avoid artefacts in anti-aliased areas where multiple objects may be present.

Smooth and variable tolerance operate the same as described in the motion analysis section above.

correct errors

This is a catch-all fix. It detects stabilisation errors after the fact, then either fully or partially reverts to the unstabilised version of the pixel. This is the fastest correction method, but if your animation is very flickery it can re-introduce flicker while removing artefacts: wherever stabilisation corrected the flicker by more than the allowable change, that correction is reverted too!

allowable change

If the stabilisation process has changed a pixel by more than this amount, that pixel reverts to its unstabilised version.

smooth

The correction strength varies with the size of the error. The range is 0 to 1: 0 completely replaces pixels that have changed by more than the allowable change with their unstabilised versions, while 1 means the more a pixel has changed, the more it reverts to the unstabilised pixel.
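The correct errors pass can be sketched as a per-pixel comparison between the stabilised and unstabilised values. This Python sketch assumes one possible reading of `smooth`: at 0, any pixel over the allowable change is fully reverted; at 1, the revert amount is `1 - allowable_change / change`, which grows from 0 toward 1 as the change grows; values in between interpolate. The function name and the exact ramp are assumptions, not the addon's documented formula.

```python
def correct_error(original: float, stabilised: float,
                  allowable_change: float, smooth: float) -> float:
    """Revert a stabilised pixel toward its original value if it changed too much.

    Hypothetical model of the 'correct errors' option:
      smooth = 0 -> pixels over the allowable change are fully reverted
      smooth = 1 -> the revert amount grows with the size of the change
    """
    change = abs(stabilised - original)
    if change <= allowable_change:
        return stabilised  # within the allowable change: keep the stabilised value
    # change > allowable_change >= 0 here, so the division is safe.
    proportional = 1.0 - allowable_change / change  # 0 at the limit, toward 1 for huge changes
    revert = (1.0 - smooth) + smooth * proportional
    return stabilised + (original - stabilised) * revert


# Stabilisation moved this pixel from 0.5 to 0.9 with an allowable change of 0.1.
print(correct_error(0.5, 0.9, 0.1, smooth=0.0))  # fully reverted to the original
print(correct_error(0.5, 0.9, 0.1, smooth=1.0))  # reverted 75% of the way
```

The sketch also shows the caveat from the description: if a pixel's genuine flicker was larger than the allowable change, the stabilised fix for that flicker is rolled back along with any real errors.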

Exclude from stabilisation with masks

Remove artefacts on elements that can’t be fixed, without having to use settings that reduce how effectively flicker is removed. To do this, make a copy of your render layer cache node (Shift+D), then use a mix node to combine the two identical render layer cache nodes. The original should go into the top ‘image’ socket and the copy into the bottom ‘image’ socket. Then use a Cryptomatte or any other type of mask to mask out specific objects or materials, and use it to drive the mix node’s fac slider. Before publishing, select the first render layer cache node. This ensures that only the first node is stabilised, and the mix node will mix back in the copy’s unstabilised pixels wherever the mask specifies. This is a huge time saver, because it removes the extra render time otherwise needed to render unstabilisable elements separately, as long as those elements aren’t noticeably flickery.
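Blender's Mix node in its default Mix blend mode outputs `(1 - fac) * top + fac * bottom` per channel, so wherever the mask is 1 the bottom socket (the unstabilised copy) shows through, and wherever it is 0 the stabilised top socket is used. The pure-Python sketch below applies that formula to single-channel pixel lists; `mix_with_mask` is an illustrative helper, not part of Blender's API or Turbo Tools.

```python
from typing import List, Sequence


def mix_with_mask(top: Sequence[float], bottom: Sequence[float],
                  fac: Sequence[float]) -> List[float]:
    """Per-pixel equivalent of a Mix node in 'Mix' mode.

    top    -> stabilised render layer cache output (top 'image' socket)
    bottom -> unstabilised copy (bottom 'image' socket)
    fac    -> mask, e.g. from a Cryptomatte node (1 = use the unstabilised pixel)
    """
    if not (len(top) == len(bottom) == len(fac)):
        raise ValueError("inputs must have the same number of pixels")
    return [(1.0 - f) * a + f * b for a, b, f in zip(top, bottom, fac)]


stabilised = [0.20, 0.20, 0.20]
unstabilised = [0.80, 0.80, 0.80]
mask = [0.0, 1.0, 0.5]  # background, masked object, anti-aliased mask edge
print(mix_with_mask(stabilised, unstabilised, mask))
```

Soft mask edges (values between 0 and 1) produce a partial blend, which keeps the boundary between stabilised and unstabilised regions from looking hard-edged.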

View Published Image

This will open the published image file in the image editor.

View Published Animation

This will open the published animation in Blender's animation player.

Temporal Troubleshooting

Anti-aliasing isn't very good around the edges of objects that pass directly over the background environment, or I get ghosting - Tick 'surfaces only' to ensure no ghosting occurs in the environment, or, if the ghosting is over other geometry, use the temporal intelligence options to resolve it.

Objects behind transparent objects don't stabilise correctly - Increase the alpha threshold property in the View Layer properties, Passes section. Setting it higher than the alpha value of the transparent material ensures that the objects behind the transparent object generate the vector data needed for stabilisation to work.

Limitations:

Stabilisation only works for renders that don't use Cycles motion blur (Cycles can't generate the necessary vector data when motion blur is enabled). If you want to use Cycles motion blur, render with enough samples that stabilisation isn't required. Alternatively, add motion blur afterwards in the compositor using a Vector Blur node (ensure the vector and depth passes are enabled before rendering). Demo of how to do that here: https://youtu.be/bvXkfrCXaV4?si=92GSZIJKmT9kaTbn&t=657

Objects whose motion can't be described by the vector pass Blender creates should be excluded from stabilisation if the error correction options can't resolve the artefacts without re-introducing flicker. In that case, either use selective stabilisation with masks as described above, or, if the problematic object has flicker that needs removing, render it separately at higher samples and then recombine it with the stabilised footage.

Choose a product version:

- $49.99 Individual (1 User, Single Machine)

| Detail | Value |
| --- | --- |
| Sales | 1700+ |
| Customer Ratings | 68 |
| Average Rating | |
| Dev Fund Contributor | |
| Published | about 3 years ago |
| Blender Version | 4.2, 4.1, 4.0, 3.6, 3.5, 3.4, 3.3, 3.2, 3.1, 3.0, 2.93 |
| Render Engine Used | Cycles, Eevee |
| License | GPL |

Have questions before purchasing?

Contact the Creator with your questions right now.
